We Thought We Knew Our Own CS Process. A Week of Discovery Proved Us Wrong.

What eight interviews taught us about our own CS team before we wrote a single line of AI code.

I’ve spent this year learning about and evangelizing AI transformation in Customer Success (CS). I’ve spoken with and written about leaders pushing the frontier, like Colin Slade at Cloudbeds, whose team built more than 150 AI agents to support CS at scale (read his story; it’s incredible!).

Writing about what’s possible is one thing. Building it yourself is another. At some point, you have to eat your own words. We at Gainsight wanted to beef up our own AI transformation, not just talk about it.

So I made leading that effort a top priority in my personal OKRs. In early November we assembled a CS working group and started by identifying the frictions we faced most as a CS org.

We came to the consensus that Instance Reviews (IRs) would be the best place to start. That wasn’t obvious to me at first. I was relatively new to the role and didn’t even know what IRs were yet—but I learned quickly.

The Role of Instance Reviews in Customer Success

Instance Reviews are one of the most important activities a Customer Success Manager (CSM) can do. They’re deep assessments of how customers are using Gainsight. They answer the questions every customer has: Am I getting the most out of this? What should I improve? What best practices am I missing?

They influence Quarterly Business Reviews (QBRs) and ultimately drive renewals, catch risk early, and identify expansion opportunities.

The problem? Few people on the team felt comfortable doing them. Instance Reviews require a level of technical analysis that not every CSM is trained for, and without a clear, repeatable approach, the work felt intimidating rather than empowering.

Roman Dalichow, our VP of Customer Success, put it bluntly: “A whole swath of CSMs shrivel up when they hear ‘instance review’.”

We knew we had to fix this, and AI was an obvious place to look. But in my industry conversations, I’d seen too many AI projects fail to make it past the demo phase. We didn’t want to throw technology at a problem we didn’t fully understand.

So we brought in Method Garage.

Why We Didn’t Do This Alone

We knew what we wanted to solve, but we also knew we didn’t want to spend months rediscovering things others had already learned. This work needed momentum, pattern recognition, and a level of experience we didn’t yet have internally.

That’s where Method Garage came in.

They’re a long-standing partner of Gainsight, and what makes them effective is what I think of as triple fluency. (1) They understand Customer Success because they’ve spent the last decade working with CS teams at enterprises like VMware, DocuSign, and Salesforce. (2) They understand what’s actually possible with AI today because they build real systems that run in production. (3) They’re builders first, focused on writing code rather than producing slide decks.

We brought Method Garage in to run a Blueprint and Prototype Sprint. At HYPER SPEED. One week to map how the work actually happened. Two more weeks to build something real.

Here’s what we learned.

What I Thought I Knew

I came in with a set of assumptions about what applying AI to Instance Reviews would require.

At the time, I believed:

Instance Reviews are a single end-to-end process we needed to automate

Nobody had cracked this yet, so we’d be starting from zero

We’d need a data warehouse or complex integrations to feed the AI

The payoff would be efficiency: 8-12 hours down to under an hour

I was wrong on almost all of it.

Finding #1: IRs Involve Two Completely Different Activities

We’d been using the term “Instance Review” as if it described a single, end-to-end process. What we quickly learned is that it’s actually two distinct jobs that happen to share a name.

The first layer is technical analysis. This is about understanding what exists in the customer’s Gainsight instance and how it’s configured:

How is the customer’s Gainsight instance set up?

Which features are turned on and which are unused?

How many rules and reports are in place?

Are scorecards current or stale?

Are they using Journey Orchestrator only for email, or have they built more sophisticated, multi-channel programs?
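To make the technical layer concrete, here is a minimal sketch of how those questions could be answered from a parsed configuration. It is an illustration, not the tool we built: the `InstanceConfig` shape, the example feature names, and the 90-day staleness threshold are all assumptions made up for this example.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical, simplified view of one customer's configuration.
# The real export's fields are not described in this post.
@dataclass
class InstanceConfig:
    features: dict[str, bool]                # feature name -> actively used?
    rule_count: int
    report_count: int
    scorecard_last_updated: dict[str, date]  # scorecard name -> last update
    journey_channels: set[str]               # channels used by Journey Orchestrator programs


def summarize(config: InstanceConfig, today: date, stale_after_days: int = 90) -> dict:
    """Answer the technical-analysis questions as a plain dictionary."""
    stale = sorted(
        name
        for name, updated in config.scorecard_last_updated.items()
        if (today - updated).days > stale_after_days  # assumed threshold
    )
    return {
        "used_features": sorted(n for n, used in config.features.items() if used),
        "unused_features": sorted(n for n, used in config.features.items() if not used),
        "rule_count": config.rule_count,
        "report_count": config.report_count,
        "stale_scorecards": stale,
        "email_only_journeys": config.journey_channels == {"email"},
    }


# Illustrative values only.
config = InstanceConfig(
    features={"Scorecards": True, "Journey Orchestrator": True, "Surveys": False},
    rule_count=42,
    report_count=130,
    scorecard_last_updated={
        "Overall Health": date(2025, 1, 15),
        "Adoption": date(2025, 10, 1),
    },
    journey_channels={"email"},
)
summary = summarize(config, today=date(2025, 11, 10))
print(summary["unused_features"], summary["stale_scorecards"])
```

A summary like this only covers the first layer. It says what exists, not whether it is working.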

The second layer is the value narrative. This is where the technical details are synthesized into something meaningful for a customer conversation:

Is this configuration actually working?

Is the customer getting full value from their investment?

What should they improve or clean up?

Which best practices are they missing?

What’s the “so what” that matters to the people on the other side of the table?

These two layers require very different skills. Some CSMs are exceptional at telling the story and connecting insights to outcomes, but feel less confident navigating a complex configuration. Others can quickly read an instance and spot issues, but struggle to translate that analysis into a clear, customer-ready narrative.

We had been trying to automate one process. What we really needed was to support two.

Finding #2: We Had an Elliot

It turned out we didn’t have to look far. One of our Principal CSMs, Elliot Hullverson, who’s been at Gainsight for more than eight years, had already figured out how to make Instance Reviews both fast and effective.

He can complete the technical analysis portion in about 15 minutes. From there, he still spends time building the value narrative and creating the deck, which brings the total to a few hours for him.

For most others, the same work takes ten to twelve hours.

Elliot was proof the problem of preparing Instance Reviews was solvable. We just hadn’t learned from him or scaled his process.

Our broader lesson was that every team has someone (or some combination of people) who has figured out pieces of the problem. Sometimes the gold is buried across three different heads. Method Garage is exceptionally good at finding these people and extracting what’s in their brains. When the expertise is fragmented across multiple people, that’s even harder to do yourself.

Finding #3: The Data Was Already There

I see companies constantly falling into the trap of, “We can’t do AI until we get our data warehouse running. We need to centralize everything. We need to fix our data nightmare first.”

Guess what? Companies have been trying to fix their data problems for decades. If you wait for perfect data infrastructure, you’ll never build anything.

I can say from personal experience: it didn’t matter.

Method Garage found data from various places that nobody was even using. Our CSMs were manually logging into customer instances and reviewing them screen by screen by screen, even though much of that same data was sitting in a 25-megabyte Excel export that our admin tool generates.

We assumed we’d need a data warehouse, complex API integrations, and ample engineering time to build data pipelines. None of that was true. The config export plus usage data from our Gainsight PX integration gave us 80-90% of what we needed.

Before you scope out a data infrastructure project, take inventory. The data you need might already exist in an export you’ve never looked at.
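Taking inventory can be as simple as listing what each sheet of an export contains and how well it is filled in. The sketch below assumes the export has already been loaded (with whatever spreadsheet library you prefer) into a mapping of sheet name to rows. The sheet and column names are made up for illustration.

```python
def inventory(sheets: dict[str, list[dict]]) -> list[dict]:
    """For each sheet, report its row count and the share of rows filled per column."""
    report = []
    for name, rows in sheets.items():
        columns = sorted({key for row in rows for key in row})
        fill_rate = {
            col: sum(1 for row in rows if row.get(col) not in (None, "")) / len(rows)
            for col in columns
        } if rows else {}
        report.append({"sheet": name, "rows": len(rows), "fill_rate": fill_rate})
    return report


# Hypothetical export contents.
export = {
    "Rules": [
        {"name": "Health score refresh", "owner": "ops"},
        {"name": "Renewal alert", "owner": ""},
    ],
    "Reports": [],
}
for entry in inventory(export):
    print(entry["sheet"], entry["rows"], entry["fill_rate"])
```

A report like this makes it quick to see which parts of an export are rich enough to use and which are mostly empty.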

Finding #4: Time Savings Wasn’t the Real Value

Our original pitch centered on efficiency. By applying AI, work that once took 8–12 hours could be completed in under an hour.

But as discovery progressed, it became clear that time wasn’t the real constraint. The deeper issue was that Instance Reviews were cognitively heavy, context-switching-intensive, and emotionally draining. They required deep product knowledge, data interpretation, narrative construction, and executive-ready storytelling—all layered on top of an already overloaded CSM role.

The process was so painful that CSMs weren’t doing them at all.

That reframed the problem entirely. During a working session, I put it this way: “This is no longer about faster Instance Reviews. This is about going from zero to one. It’s the difference between doing them and not doing them.”

The true value came from enabling a task that was effectively non-existent.

The temptation with AI is to focus on efficiency gains, shaving time off tasks that already exist. This framing misses the more important shift.

AI can make an enormous impact by making previously impractical work feasible. When effort drops below the threshold of resistance, teams stop avoiding the work and start building it into their rhythm. At that point, AI is no longer an accelerator. It becomes an enabler of entirely new habits.

The Blueprint Process

The first week of our Blueprint sprint was focused on understanding how Instance Reviews actually happened, not how we assumed they worked.

With Method Garage’s help, we ran eight internal interviews with everyone from individual CSMs to our VP of Customer Success. Instead of asking, “What do you need?”, we asked something more revealing: “Show me how you do this, and explain why.”

That distinction mattered. Watching the work in real time surfaced details that never appear in documentation.

Doing this internally would have taken months. It was Q4, calendars were packed, and context switching would have slowed everything down. Having a dedicated team focused on discovery created momentum we wouldn’t have had otherwise.

Method Garage helped us get past surface-level explanations and into the real decision-making behind the work. Why did you choose that? What were you looking for? How did you know when to move on?

Those answers live in people’s heads, especially your strongest practitioners. They’re rarely written down, and they’re exactly what needs to be captured to automate the work responsibly.

The work done in that week produced the following results:

They found internal experts like Elliot who had already developed repeatable approaches.

They mapped the real workflow, not the idealized version.

They surfaced friction across the work, from technical analysis to turning insights into a clear customer story.

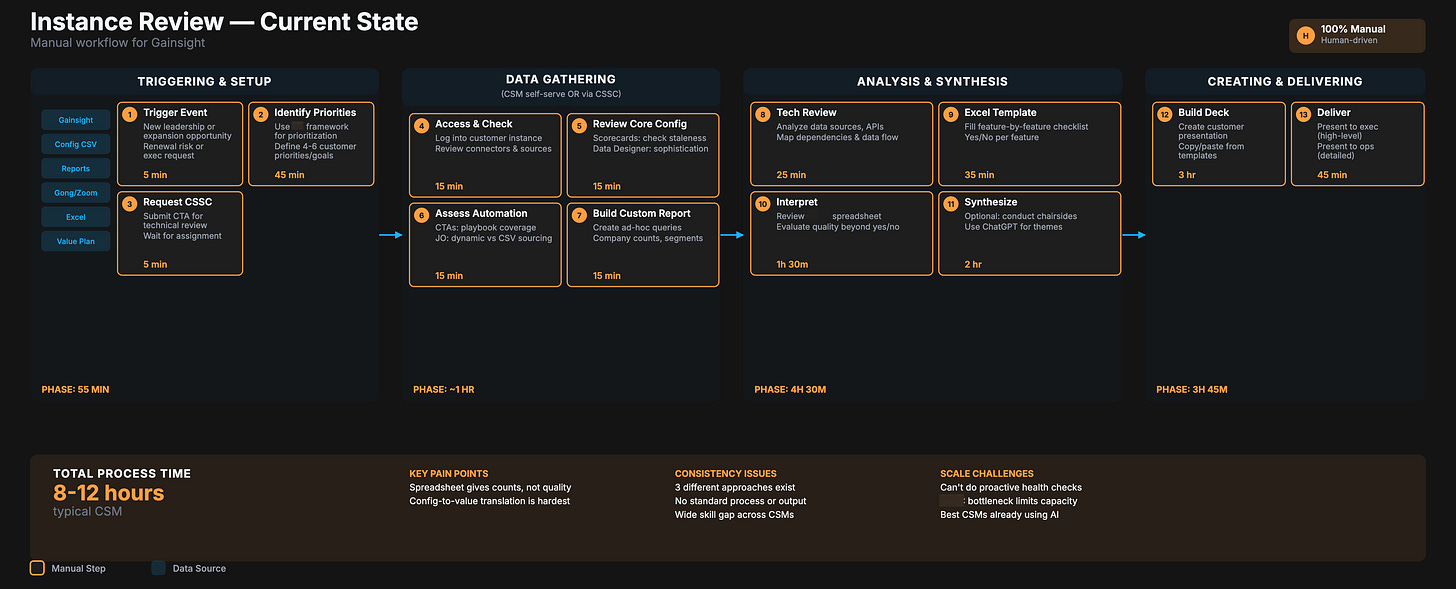

We left with a current-state workflow diagram, a future-state design showing where AI could help, and a clear plan for what to prototype next.

What We’re Planning to Prototype

With the current state mapped, we moved into the future-state design phase.

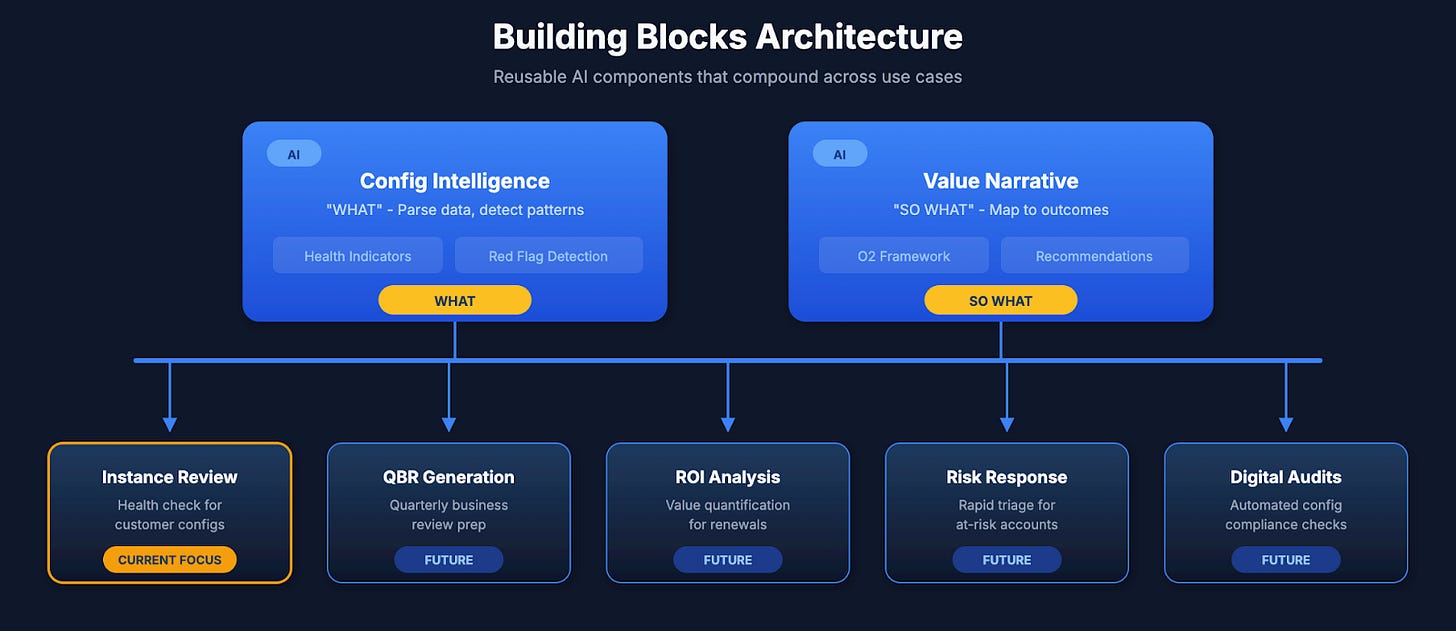

Here’s what changed from our original thinking: we stopped thinking about “Instance Review Automation” as a single tool. The discovery showed us this is two distinct jobs with different skill requirements.

So we’re designing two separate modules that solve each job (Config Intelligence and Value Narrative), and that can be reused for other CS workflows down the road.

More on this in Part 2 of our story, coming soon.

Lessons for Other Leaders

What we learned in that first week applies beyond Gainsight. If you’re thinking about automating a CS workflow (or any knowledge work), here’s what I’d take from this experience.

1. Know Your Real Processes

We’re a Customer Success company, and many of our CS processes are well-documented, consistent, and repeatable, just as you’d expect. But not all of them. Instance Reviews were one of those gaps. They lived largely in tribal knowledge and individual habits, which meant we didn’t understand the process at the level of detail required to automate it.

It’s a common reality you have to confront before you try to apply AI.

2. Find Your Elliot(s) Before You Build

I talk about this quite often. Someone on your team, or more often a small group of people, has already solved pieces of the problem manually. Find them. Learn from them. Bring them into the design process early.

If you automate without them, you’ll automate the wrong thing.

When expertise is scattered across multiple brains, extracting it becomes even harder. That’s where an experienced partner can make a real difference by helping surface what already exists.

3. Ask to See the Work and Why

People are good at describing aspirational processes. Observation reveals how work actually happens.

But observation alone isn’t enough. You also have to ask why. Why did you take that step? How did you make that decision? What were you looking for at that moment?

The reasoning behind those choices is rarely documented, yet it’s exactly what needs to be captured if you want an agent to do more than automate the surface.

One thing that helped us was mapping the entire workflow visually. Four phases. Thirteen steps. Laid out end to end. That diagram became an alignment tool. People who weren’t close to the Instance Review process could finally see how it really worked and how much it varied from person to person.

4. Challenge Your Framing Early

We originally framed this as a time-savings problem. Eight to twelve hours down to under an hour.

What we learned is that it was really a capability problem. The biggest win wasn’t doing the work faster. It was enabling work that often wasn’t happening at all. That shift changes the entire business case.

5. Start With the Data You Have

The instinct to fix your data infrastructure before starting an AI project has derailed more initiatives than bad models ever have.

Take inventory of what already exists. You’ll probably find you can get much further than you expect without waiting for perfection.

6. Blueprint Before You Build

That first week of interviews saved us from building the wrong thing. It also created buy-in from the people who would actually use the solution. Elliot will champion this work because he helped shape it.

Discovery isn’t a delay. It’s de-risking.

What’s Next

With help from Method Garage, we’re building the prototype over the next two weeks.

Yes, I said two weeks. Sounds crazy, I know.

One week to understand a process that’s been running (and frustrating people) for years. Two more weeks to have something working. Our focus is to test the process against real customer data and compare outputs to what Elliot produces manually. We’ll see if the AI can actually find the same insights he finds.
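One simple way to frame that comparison is to treat each review as a set of insights and measure the overlap. This is a sketch under a big assumption: that insights can be normalized to short, comparable labels. In practice, matching free-text findings takes human judgment. The labels below are hypothetical.

```python
def compare_insights(ai: set[str], expert: set[str]) -> dict:
    """Compare AI-generated insights against an expert's manual review."""
    matched = ai & expert
    return {
        "matched": sorted(matched),
        "missed_by_ai": sorted(expert - ai),   # expert found it, AI did not
        "extra_from_ai": sorted(ai - expert),  # AI found it, expert did not
        # Share of the expert's insights that the AI also found.
        "recall": len(matched) / len(expert) if expert else 0.0,
        # Share of the AI's insights that the expert also found.
        "precision": len(matched) / len(ai) if ai else 0.0,
    }


# Hypothetical insight labels for one customer.
ai_insights = {"stale_scorecards", "email_only_journeys", "unused_surveys"}
expert_insights = {
    "stale_scorecards",
    "email_only_journeys",
    "duplicate_rules",
    "low_report_usage",
}
result = compare_insights(ai_insights, expert_insights)
print(result["missed_by_ai"], result["recall"])
```

The "extra" insights are worth a manual look either way: they may be noise, or they may be things a human reviewer did not have time to check.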

To be clear, a working prototype doesn’t mean the work is finished. It shows the path is viable, reduces risk before a full build, and creates momentum internally.

After the prototype, we’ll make a clear go or no-go decision on building a production-grade version. From there, we’ll iterate as we roll it out to more CSMs, knowing it won’t be perfect on day one. Real users always surface real edge cases, and improvement comes from working through them.

If the prototype works, we’ll know the path is viable. We’ll have evidence instead of theory, and a much clearer picture of what it actually takes to deliver Elliot-level capability to every CSM.

Part 2 is coming soon, where I’ll share what happened when we actually built it.

🌱 Josh

SVP, Strategy & Market Development @ Gainsight

Want to compare notes with other Customer Success leaders experimenting with AI and agentic workflows? Drop a comment below or reach out. I’d love to hear what you’re building.

A huge thank you to Method Garage for partnering with us on this work. Their ability to uncover real workflows, surface hidden expertise, and turn messy reality into something buildable made this progress possible. If you’re exploring how to apply AI to complex, human-heavy processes, they’re worth knowing.
